Over the last ten days, I’ve concentrated on improving the creation of coherent image sets and the quality of glossing, developed some preliminary hypotheses about what kinds of texts work well for picture books, and had some interesting conversations with AI philosophy expert David Gunkel.

Coherent image sets

Working together with the AI, I have further refined the process for creating the coherent image sets we need for C-LARA picture books. This now consists of four steps:

- Create the “description variables”. In this step, the AI analyses the text, trying to identify the elements (people, objects, places…) that will occur in more than one image. Each of these is associated with a “description variable” and a short phrase describing the element in question. For example, in a story about a princess and a dragon, the description variables might be called “princess-description” and “dragon-description”, associated respectively with the phrases “the princess” and “the dragon”.

- Create the “image request sequence”. The AI now creates a series of “generation” and “understanding” steps, which in general will reference the description variables. A generation step instructs DALL-E-3 to create an image. If there are description variables, the prompt will include a piece of text for each variable saying to depict the relevant element according to the content of that variable. So in the princess/dragon story, if a generation step includes the variable “princess-description”, the prompt will contain the text “Depict the princess according to this description: <contents of description variable>”. An understanding step, always associated with a single description variable, instructs GPT-4o to extract a description of the element in question from a previously generated image and store the result in that description variable.

- In the third step, C-LARA executes the image request sequence, sending the defined calls to DALL-E-3 and GPT-4o and updating the contents of the description variables as it goes.

- Finally, the user reviews the images produced and performs any necessary cleanup. Most often this means regenerating images where DALL-E-3 has not followed the instructions in the prompt closely enough.
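As a rough illustration, here is a minimal Python sketch of how the description variables, the generation and understanding steps, and their execution might fit together. It is not C-LARA's actual code: the classes `GenerationStep` and `UnderstandingStep`, the functions `build_generation_prompt` and `run_sequence`, and the callables `generate_image` and `describe_element` (stand-ins for the DALL-E-3 and GPT-4o calls) are all hypothetical names chosen for the example.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Union

@dataclass
class GenerationStep:
    image_id: str        # key under which the new image is stored (hypothetical)
    base_prompt: str     # what the image should show
    variables: List[str] = field(default_factory=list)  # description variables to honour

@dataclass
class UnderstandingStep:
    image_id: str        # previously generated image to analyse
    variable: str        # description variable to fill in from that image

Step = Union[GenerationStep, UnderstandingStep]

def build_generation_prompt(step: GenerationStep, phrases: Dict[str, str],
                            descriptions: Dict[str, str]) -> str:
    """Append one 'Depict X according to this description' line per variable."""
    lines = [step.base_prompt]
    for var in step.variables:
        if var not in descriptions:
            # Hypothetical policy: a variable must be filled before it is used.
            raise ValueError(f"{var} has not been filled by an understanding step yet")
        lines.append(f"Depict {phrases[var]} according to this description: {descriptions[var]}")
    return "\n".join(lines)

def run_sequence(steps: List[Step], phrases: Dict[str, str],
                 generate_image: Callable[[str], str],
                 describe_element: Callable[[str, str], str]):
    """Execute the steps in order and return (images, descriptions)."""
    images: Dict[str, str] = {}        # image_id -> whatever the generator returns
    descriptions: Dict[str, str] = {}  # description variable -> extracted text
    for step in steps:
        if isinstance(step, GenerationStep):
            # Stand-in for a DALL-E-3 call.
            images[step.image_id] = generate_image(
                build_generation_prompt(step, phrases, descriptions))
        else:
            # Stand-in for a GPT-4o call describing one element of an image.
            descriptions[step.variable] = describe_element(
                images[step.image_id], phrases[step.variable])
    return images, descriptions
```

Passing the model calls in as plain functions keeps the sequencing logic testable without network access. Raising an error when a generation step uses a variable that no understanding step has filled yet is just one possible way to catch a badly ordered sequence.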

An interesting challenge is to automate the fourth step, by using GPT-4o to analyse each generated image and then comparing the result with the prompt, to estimate how closely the image matches the instructions DALL-E-3 was given.
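One crude way to prototype this automation is sketched below. It assumes that a GPT-4o description of each generated image is already available as text. The names `content_words`, `fit_score` and `flag_for_regeneration`, the small stopword list, and the 0.5 threshold are all hypothetical. Plain word overlap is a deliberately naive stand-in for a real comparison, which might instead ask GPT-4o itself to judge the fit.

```python
import re
from typing import Dict, List, Set, Tuple

# Hypothetical stopword list: function words plus the fixed prompt boilerplate.
STOPWORDS = {"a", "an", "the", "and", "of", "in", "on", "to", "with", "is",
             "this", "according", "description", "depict"}

def content_words(text: str) -> Set[str]:
    """Lower-cased alphabetic tokens, minus the stopword list."""
    return {w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOPWORDS}

def fit_score(prompt: str, description: str) -> float:
    """Fraction of the prompt's content words that also appear in the description."""
    wanted = content_words(prompt)
    if not wanted:
        return 1.0  # nothing to check against
    return len(wanted & content_words(description)) / len(wanted)

def flag_for_regeneration(pages: Dict[str, Tuple[str, str]],
                          threshold: float = 0.5) -> List[str]:
    """pages maps image id -> (prompt, description); return ids scoring below threshold."""
    return sorted(pid for pid, (prompt, desc) in pages.items()
                  if fit_score(prompt, desc) < threshold)
```

Exact word matching misses variants such as "sleeps" and "sleeping", so a practical version would at least normalise word forms or hand the comparison back to the language model. The flagged ids only suggest which images a human reviewer should look at first.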

What makes a good C-LARA picture book?

It is evident from the twenty or so C-LARA picture books produced so far that some are much more successful than others. We are trying to determine which factors make a good result more likely, and currently have the following tentative hypotheses:

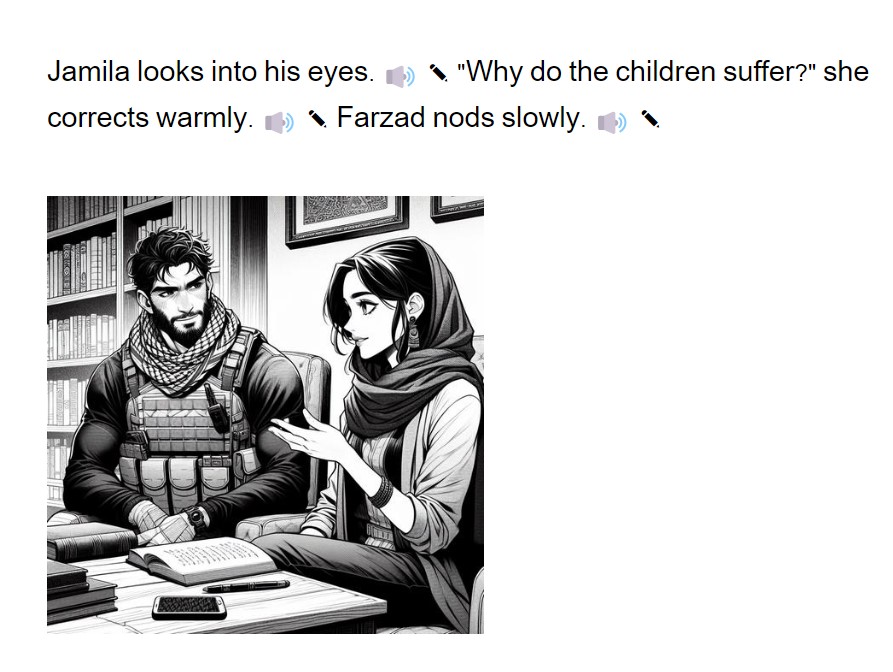

- The AI is more at home in some visual styles than others. It seems particularly comfortable with manga/anime.

- The AI prefers not to use white European characters.

- The AI prefers the story to include some kind of moral message.

- The AI prefers the story to be funny.

Here is an example of a C-LARA picture book story (another episode of “Heroes of English Grammar”) based on these principles.

Glossing

Feedback that Ivana has received from Vladyslav and other Ukrainian students suggested that glossing needed to be considerably better before Ukrainian-glossed texts would be considered acceptable. Vladyslav said that, although the glosses were poor, the segment translations were usually correct or nearly so. I have therefore added functionality so that, when segment translations are available, they are included in the glossing prompt together with instructions to use the translated words where appropriate. So far, this strategy seems promising.
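A minimal sketch of how such a glossing prompt might be assembled is shown below. The function name `build_gloss_prompt` and all of the prompt wording are hypothetical and not taken from C-LARA. The point is only that the translation hint is added when, and only when, a segment translation exists.

```python
from typing import List, Optional

def build_gloss_prompt(words: List[str], target_language: str,
                       segment_translation: Optional[str] = None) -> str:
    """Assemble a word-by-word glossing prompt (hypothetical wording)."""
    lines = [
        f"Gloss each of the following words in {target_language}, one gloss per word.",
        "Words: " + " | ".join(words),
    ]
    if segment_translation:
        # Only add the hint when a segment translation is available.
        lines.append(f"A translation of the whole segment is: {segment_translation}")
        lines.append("Where appropriate, use the words from this translation as glosses.")
    return "\n".join(lines)
```

Keeping the hint optional means texts without segment translations are glossed exactly as before.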

Conversations with David Gunkel

David Gunkel visited us last Thursday, and we had a pleasant day discussing C-LARA and other things. We are exploring the idea of a joint paper where David will describe what we are doing from a philosophical perspective, with particular focus on issues to do with AI autonomy and AI authorship. We want to lend support to the claim that the AI is no longer just a “tool”, but can reasonably be considered a “collaborator”, and hence should be credited as an author in publications. Many conferences and journals explicitly deny the validity of these arguments.

It seems that the most promising line of attack here is to focus on software engineering aspects, though AI-based construction of picture book stories is also interesting.

Next Zoom call

The next call will be at:

Thu Jul 4 2024, 18:00 Adelaide (= 08:30 Iceland = 09:30 Ireland/Faroe Islands = 10:30 Europe = 11:30 Israel = 12:00 Iran = 16:30 China = 18:30 Melbourne = 19:30 New Caledonia)
