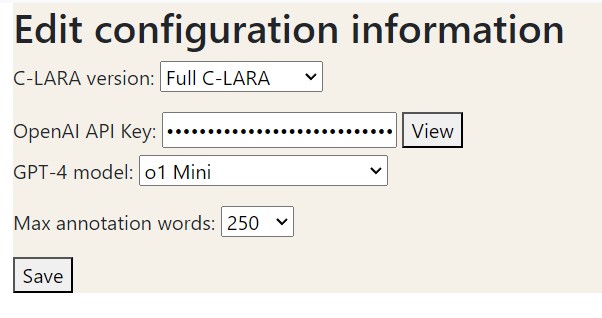

I have installed support for the two new OpenAI models o1-preview and o1-mini in C-LARA. They are based on Chain-of-Thought reasoning trained using reinforcement learning, and appear to be considerably smarter than gpt-4o. You can select them from the Edit configuration information screen in the usual way:

Based on some preliminary testing, I would not recommend using o1-preview for anything except writing long or complex texts. It is very expensive! However, as you can see from the short story we posted the other day, it opens up some really interesting possibilities in the area of creative writing.

o1-mini seems affordable: it costs perhaps two or three times as much as gpt-4o. My first impression is that it gives significantly better annotation performance, but it’s too early to be sure. I have started reannotating the examples from the ALTA 2023 paper, and we should know more soon. If other people would like to get involved, that would be great! It would be natural to include the results in the upcoming third C-LARA progress report.

Following a discussion with Belinda and Francis yesterday, I will soon add more options to the Edit configuration information screen so that you can choose the OpenAI model in a fine-grained way, specifying which model to assign to each task. There will also be some preset options with titles like “Cheapest” (gpt-4 for everything), “Default” (o1-mini where it will help, otherwise gpt-4) and “Best” (o1-preview for everything).
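
To make the preset idea concrete, here is a rough Python sketch of how the three presets and a per-task override might fit together. It is only an illustration, not the C-LARA implementation: the task names, the set of tasks where o1-mini is assumed to help, and the `model_for_task` function are all hypothetical. Only the preset titles and model names come from the plan described above.

```python
# Hypothetical task names, for illustration only; the real C-LARA task list may differ.
TASKS = ("writing", "segmentation", "glossing", "lemma_tagging")

# Assumption: which tasks benefit from o1-mini is not yet established,
# so this set is a placeholder.
O1_MINI_TASKS = {"glossing", "lemma_tagging"}

BASE_MODEL = "gpt-4"  # the baseline model named for the "Cheapest" preset

# Each preset maps every task to a model name.
PRESETS = {
    "Cheapest": {task: BASE_MODEL for task in TASKS},
    "Default": {
        task: ("o1-mini" if task in O1_MINI_TASKS else BASE_MODEL)
        for task in TASKS
    },
    "Best": {task: "o1-preview" for task in TASKS},
}


def model_for_task(task, preset="Default", overrides=None):
    """Return the model for a task; a per-task override beats the preset."""
    if task not in TASKS:
        raise ValueError(f"Unknown task: {task}")
    if preset not in PRESETS:
        raise ValueError(f"Unknown preset: {preset}")
    if overrides and task in overrides:
        return overrides[task]
    return PRESETS[preset][task]
```

With this layout, `model_for_task("writing", "Best")` picks o1-preview, while passing `overrides={"writing": "o1-preview"}` together with the "Cheapest" preset would use the expensive model for writing only and keep the baseline everywhere else.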
