We’re now close to the end of the current development cycle for C-LARA, and we should review our priorities before we start on the next one. This post summarises what we’ve done over the last 8-9 months, what we’re currently in the middle of, and what we might do next.

What we’ve done

Here are the main things we’ve done in C-LARA during the current cycle, roughly Apr 2024- Jan 2025.

Multi-Word Expressions. Almost all languages make heavy use of Multi-Word Expressions, combinations of two or more words whose meanings can’t be easily deduced from the component words; typical examples in English are phrasal verbs like “give up” or “turn in” and nominal compounds like “dead end” or “high rise”. During the previous cycle, we identified this as the major issue when carrying out annotation (glossing, lemma-tagging). We still aren’t doing it perfectly, it’s a highly non-trivial problem, but we’re getting much better performance.

Images. Many people said they wanted better ways to create images for C-LARA texts, in particular for making various kinds of picture books. We now have a systematic way to create sets of images which are at least roughly coherent. The AI first creates a style and descriptions for recurring visual elements, and finally uses these to generate the full set of images.

Parallelism. We now use parallel processing to speed up annotation and image generation.

Simple C-LARA. The above improvements, and some others (in particular, translations for segments) are now integrated into Simple C-LARA so that they are easy to use.

Using non-AI-supported languages. We have completely revised the infrastructure to support people working with languages the AI doesn’t know, in practice usually Indigenous languages. C-LARA provides more support, and there is functionality which allows community reviewing of images.

AIs as software engineers. As the AI models become more powerful, they have been able to play an increasingly active role in software development. The community image reviewing module, about 2K lines of code, was almost entirely written by OpenAIs’s o1.

AIs as authors. More powerful models are also able to take more responsibility for writing. We have posted two reports presenting substantial texts, 3-5K words, entirely written by o1 under human supervision. Echoes of Solitude is a short story; “ChatGPT is Bullshit” is Bullshit is a rebuttal of the high profile 2024 paper ChatGPT is Bullshit. In both cases we include a full trace of the process used to write the texts.

What we’re doing now

The new C-LARA functionality described above needs to be used for real-world tasks before we can make it stable. We have recently started two projects of that kind.

Picture books for Indigenous languages. Sophie has kicked this off, creating a C-LARA-generated picture dictionary for the Australian Indigenous language Kok Kaper. She is working together with the Kok Kaper community in Queensland, who are enthusiastic about the project. We hope soon to start on similar efforts for the Kanak languages Iaai and Drehu, working with Anne-Laure, Stéphanie, Fabric and Pauline.

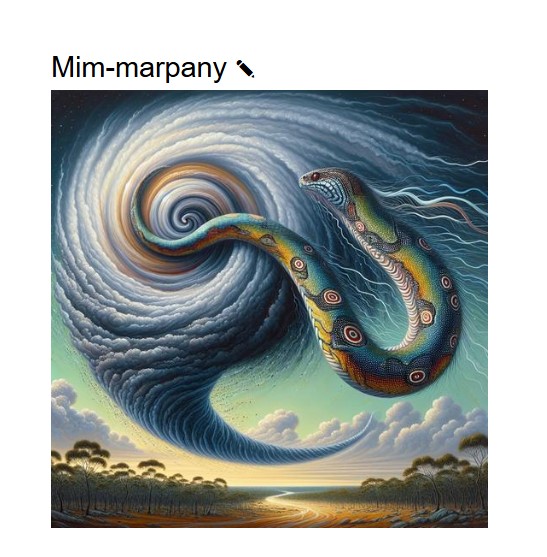

Draft page from the Kok Kaper picture dictionary. The word mim-marpany can mean either “cyclone” or “rainbow snake”.

English learning resources for Ukrainians. Together with Vladyslav, we have put together an initial set of ten English texts glossed in Ukrainian. We are polishing them now and will soon make them available.

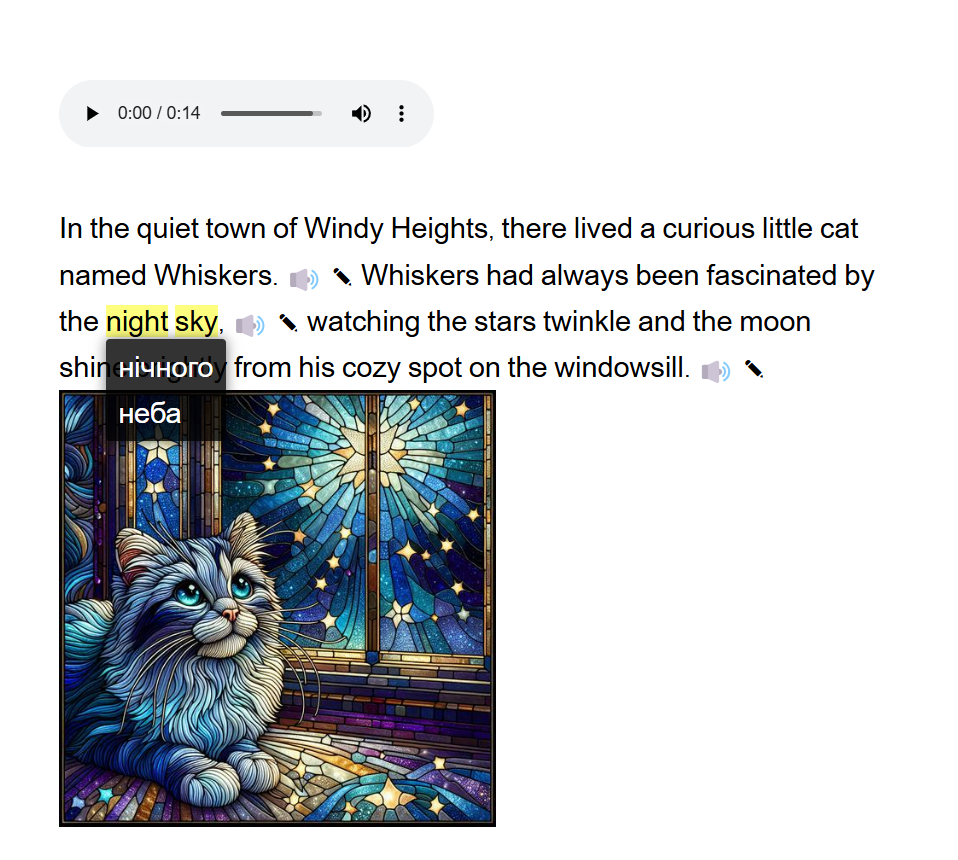

Draft page from the Ukrainian-glossed version of The Cat and the Bat, a children’s story written by C-LARA. Illustrations were created in a style intended to look like stained glass windows.

What we could do next

Current development of the platform’s functionality is largely driven by the requirements of the two real-world projects above. We need to do more work on making C-LARA easy to use with Indigenous languages and supporting accurate glossing in Ukrainian.

We also need to document the work we’ve done since last March. This will be presented in the form of the Third C-LARA Project Report, which we will start putting together soon.

Our next major challenge will be to move up to a higher level when using the AI to do the software engineering. The AI models are now good enough that it seems realistic to aim at letting the AI take responsibility for all or nearly all of the codebase, currently about 35K lines. In order for this to be possible, the first step will be to clean it up, and in particular to make it more modular; a very interesting question is how much the AI can help automate that process. Stay tuned.

Leave a comment