I have still been focussing mostly on Multi-Word Expressions. I have also added code to let us use OpenAI’s newly released GPT-4o model in C-LARA.

Multi-Word Expressions

We now have the second part of the MWE processing in place: if you perform the MWE annotation step, then the results are used in the glossing and lemma-tagging annotation steps. Specifically, if MWE annotation has been done, then glossing and lemma tagging are performed one segment at a time. For each segment, processing starts by retrieving any associated MWEs. The modified glossing/lemma-tagging prompts then say that all the words which form one of the identified MWEs must receive the same gloss or lemma.
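The per-segment flow described above can be sketched roughly as follows. This is a minimal illustration, not C-LARA's actual code. The `Segment` record, the function names and the prompt wording are all hypothetical, and the sketch only builds the prompts. The call to the model is left out.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Segment:
    """Hypothetical record: one segment's text plus the MWEs found for it."""
    text: str
    mwes: list[str] = field(default_factory=list)


def build_gloss_prompt(segment: Segment, l1: str) -> str:
    """Build a glossing prompt for one segment, adding the MWE constraint if needed."""
    lines = [
        f"Gloss each word of the following text in {l1}.",
        f"Text: {segment.text}",
    ]
    if segment.mwes:
        # Only segments with MWEs get the extra "same gloss" instruction.
        listed = "; ".join(f'"{m}"' for m in segment.mwes)
        lines.append(
            f"These are multi-word expressions: {listed}. "
            "All the words that form one of these expressions must receive the same gloss."
        )
    return "\n".join(lines)


def gloss_prompts(segments: list[Segment], l1: str) -> list[str]:
    """Process the text one segment at a time, producing one prompt per segment."""
    return [build_gloss_prompt(seg, l1) for seg in segments]


if __name__ == "__main__":
    seg = Segment("once or twice she had peeped into the book",
                  ["once or twice", "peeped into"])
    print(build_gloss_prompt(seg, "French"))
```

Lemma tagging would follow the same pattern, with "lemma" in place of "gloss" in the instruction.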

To take an example from the first couple of pages of Alice in Wonderland, the text I’ve been testing with, one early segment is

once or twice she had peeped into the book her sister was reading, but it had no pictures or conversations in it,

The MWE analysis step identified the phrases “once or twice” and “peeped into” as MWEs. When I gloss in French, the result is

once#une ou deux fois# or#une ou deux fois# twice#une ou deux fois# she#elle# had#avait# peeped#jeté un coup d’œil# into#jeté un coup d’œil# the#le# book#livre# her#sa# sister#soeur# was#était# reading#en train de lire#, but#mais# it#il# had#avait# no#pas de# pictures#images# or#ni# conversations#conversations# in#dans# it#ce#,|

where, as you can see, the component words in “once or twice” are all glossed as #une ou deux fois#, and the component words in “peeped into” are all glossed as #jeté un coup d’œil#. Lemma tagging is similar.
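The `word#gloss#` output shown above is easy to check mechanically. Here is a small sketch of how one might parse it and confirm that the components of an MWE share one gloss. The function names are hypothetical. The sketch also assumes that words contain no whitespace, `#` or `|` and that glosses contain no `#`. It only finds contiguous MWEs, so a discontinuous phrasal verb like "look it up" would need word positions instead.

```python
from __future__ import annotations

import re

# A word followed by its gloss between '#' marks, e.g. "book#livre#".
# Assumption: words contain no whitespace, '#' or '|'; glosses contain no '#'.
TOKEN_RE = re.compile(r"([^\s#|]+)#([^#]*)#")


def parse_glossed(segment: str) -> list[tuple[str, str]]:
    """Return the (word, gloss) pairs in a segment written in word#gloss# format."""
    return [(m.group(1), m.group(2)) for m in TOKEN_RE.finditer(segment)]


def mwe_glosses(pairs: list[tuple[str, str]], mwe: str) -> set[str] | None:
    """Return the glosses on the first contiguous occurrence of `mwe`, or None if absent."""
    target = mwe.lower().split()
    words = [w.lower() for w, _ in pairs]
    n = len(target)
    for i in range(len(words) - n + 1):
        if words[i:i + n] == target:
            return {g for _, g in pairs[i:i + n]}
    return None


def mwe_consistent(pairs: list[tuple[str, str]], mwe: str) -> bool:
    """True if the MWE occurs and all its component words received the same gloss."""
    glosses = mwe_glosses(pairs, mwe)
    return glosses is not None and len(glosses) == 1
```

Applied to the start of the segment above, `mwe_consistent` holds for both "once or twice" and "peeped into". It fails for a non-MWE pair like "she had", whose words carry two different glosses.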

Next, the AI and I will add the third and final part of the processing: we will modify the production of the multimedia form by adding JavaScript so that clicking or hovering on one component of an MWE highlights the other components as well. This should be pedagogically very useful.
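The highlighting itself would be JavaScript running in the browser. For that to work, the generated HTML first needs to record which words belong to the same MWE. One possible approach, sketched here in Python as an assumption rather than the planned design, is to give every component of an MWE a shared attribute. The attribute name `data-mwe-id` and the function name are hypothetical. A click or hover handler could then find the sibling components with something like `document.querySelectorAll('[data-mwe-id="0"]')`.

```python
from __future__ import annotations

import html


def render_with_mwe_ids(pairs: list[tuple[str, str]], mwes: list[list[int]]) -> str:
    """Render (word, gloss) pairs as HTML spans; words in the same MWE share a data-mwe-id.

    Each MWE is given as the list of word positions it covers, so
    discontinuous MWEs are handled too. Attribute names are hypothetical.
    """
    mwe_of = {}
    for mwe_id, positions in enumerate(mwes):
        for pos in positions:
            mwe_of[pos] = mwe_id
    spans = []
    for i, (word, gloss) in enumerate(pairs):
        # html.escape also escapes quotes, so glosses are safe inside attributes.
        attrs = f'class="word" data-gloss="{html.escape(gloss)}"'
        if i in mwe_of:
            attrs += f' data-mwe-id="{mwe_of[i]}"'
        spans.append(f"<span {attrs}>{html.escape(word)}</span>")
    return " ".join(spans)
```

Identifying MWEs by position rather than by string keeps repeated words apart. For example, the two occurrences of "it" in the Alice segment would stay separate.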

After this, or possibly in parallel, we will start tightening up the MWE analysis prompts to make the analysis more accurate. The prompts will clearly work better if they are adapted to specific languages. Thus for example the English-language prompts we’ve started with mention phrasal verbs as an important class of MWEs. Phrasal verbs are also important in some other languages we use, like Farsi and Japanese. In French and Italian, reflexive verbs, which don’t really exist in English, are the most important class. German has reflexive verbs too, but separable verbs are probably more important.
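One simple way to make the MWE analysis prompt language-specific is a table of the MWE classes to emphasise for each language. The following is a minimal sketch along those lines. The table entries follow the examples in the paragraph above, while the table name, the function name and the prompt wording are hypothetical. Real prompts would need to be much richer, for instance with worked examples.

```python
from __future__ import annotations

# Hypothetical table of the MWE classes to emphasise per language,
# following the examples given in the text.
MWE_HINTS = {
    "english": ["phrasal verbs"],
    "farsi": ["phrasal verbs"],
    "japanese": ["phrasal verbs"],
    "french": ["reflexive verbs"],
    "italian": ["reflexive verbs"],
    "german": ["separable verbs", "reflexive verbs"],
}


def mwe_prompt(language: str, text: str) -> str:
    """Build an MWE analysis prompt, adding language-specific hints when we have any."""
    hints = MWE_HINTS.get(language.lower(), [])
    prompt = f"Identify the multi-word expressions (MWEs) in this {language} text:\n{text}\n"
    if hints:
        prompt += "Pay particular attention to " + " and ".join(hints) + ".\n"
    return prompt
```

Languages not in the table fall back to the generic prompt. Hints can then be added one language at a time.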

It will be very interesting to see how accurate we can make MWE tagging. Good performance here should have a major impact on system usability, so it’s worth investing time in this task.
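Measuring that accuracy will require some kind of score. One standard choice, assumed here rather than taken from the C-LARA work, is exact-match precision, recall and F1 against a hand-annotated gold standard. In this sketch, both the gold set and the extra prediction "had no" in the usage example are invented for illustration.

```python
from __future__ import annotations


def mwe_scores(predicted: set[str], gold: set[str]) -> tuple[float, float, float]:
    """Exact-match precision, recall and F1 for the MWEs found in one text."""
    true_pos = len(predicted & gold)
    precision = true_pos / len(predicted) if predicted else 0.0
    recall = true_pos / len(gold) if gold else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


if __name__ == "__main__":
    # Illustrative only: one spurious prediction ("had no") alongside the two real MWEs.
    predicted = {"once or twice", "peeped into", "had no"}
    gold = {"once or twice", "peeped into"}
    print(mwe_scores(predicted, gold))  # precision 2/3, recall 1.0, F1 0.8
```

Exact matching is strict. A partial-credit variant that counts overlapping words might be fairer for long MWEs.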

The MWE-based analysis code is installed on the server if people want to experiment, but so far it is only used in Advanced C-LARA.

GPT-4o

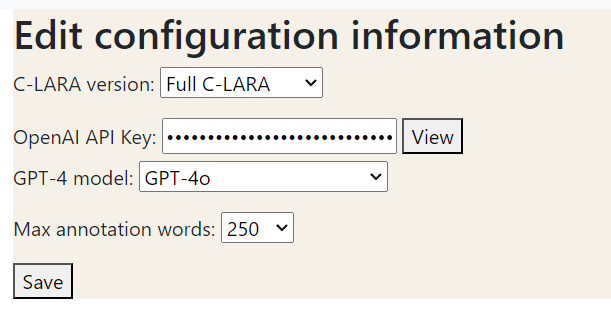

You can use OpenAI’s new GPT-4o model in C-LARA by selecting it from the Edit Configuration Information menu (far right):

OpenAI say GPT-4o is both faster and better than GPT-4 Turbo, but I have not yet had time to test what this means for C-LARA. One thing at least is clear: it costs half as much to use, so they evidently want to encourage people to move.

Using C-LARA in the classroom

At the last Zoom call, Rina said she is in touch with people in Poland who are thinking of using C-LARA in the classroom, probably for English and Russian. MWEs are very important in English, so the timing is good. We should talk more about this.

Publications

We should also talk more about publications. A paper for the ALTA 2024 conference about the MWE work would follow on naturally from last year’s paper. The deadline would be mid-September.

Rina and I also talked about the possibility of writing a journal paper. We have a lot of material that’s so far only been made available in preprint form and which we could presumably use.

Next Zoom call

The next call will be at:

Thu May 16 2024, 18:00 Adelaide (= 08:30 Iceland = 09:30 Ireland/Faroe Islands = 10:30 Europe = 11:30 Israel = 12:00 Iran = 16:30 China = 18:30 Melbourne = 19:30 New Caledonia)
